AVN: Building a Real-Time Avian Migration Tracking Network

How my team built a cross-platform mobile app that combines computer vision, sensor fusion, and gamification to create a collaborative network for tracking bird migrations in real time.

The Vision

For our senior capstone project sponsored by Raytheon, my team (Jorge Carbajal, Tai Nguyen, Joshua Vrana, and I) set out to solve a unique challenge in wildlife conservation: connecting individual birdwatchers into a large-scale, real-time network for tracking avian migration patterns.

Traditional birdwatching is a solitary activity that lacks real-time verification and community coordination. While platforms exist for logging sightings, none provide immediate alerts or collaborative tracking across geographic regions. We wanted to change that.

The Challenge

Research Question: How can we connect local birdwatching groups and individuals into a large-scale, real-time network that verifies avian migration sightings and alerts nearby observers to them?

Our solution needed to meet several ambitious requirements:

System Architecture

Technology Stack

We built AVN using modern mobile and cloud technologies:

Frontend:

Backend:

Core Algorithms

The heart of AVN lies in two proprietary algorithms we developed:

AMVEA - Avian Motion Vector Estimation Algorithm

AMVEA fuses multiple smartphone sensor inputs to calculate a bird's velocity and direction in 4D space (3D position + time):

The algorithm compensates for user device movement, isolating the bird's actual motion vector from the background noise of the observer's own movements.
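To make the compensation step concrete, here is a minimal sketch rather than the AMVEA implementation itself. It assumes the bird's apparent velocity relative to the phone and the phone's own velocity have already been estimated as vectors in a shared east/north/up frame, so the bird's ground velocity is simply their sum. The type and function names (`Vec3`, `MotionVector`, `compensateObserverMotion`) are hypothetical.

```typescript
// Hypothetical names throughout; an illustration, not the AMVEA implementation.
interface Vec3 {
  east: number;  // metres per second
  north: number; // metres per second
  up: number;    // metres per second
}

interface MotionVector {
  velocity: Vec3;
  speed: number;      // metres per second, magnitude of velocity
  headingDeg: number; // compass heading: 0 = north, 90 = east
}

// Assumption: both inputs are expressed in the same east/north/up frame.
// Adding the device's velocity back removes the observer's own motion
// from the apparent (relative) velocity of the bird.
function compensateObserverMotion(
  relativeToDevice: Vec3,
  deviceVelocity: Vec3,
): MotionVector {
  const velocity: Vec3 = {
    east: relativeToDevice.east + deviceVelocity.east,
    north: relativeToDevice.north + deviceVelocity.north,
    up: relativeToDevice.up + deviceVelocity.up,
  };
  const speed = Math.hypot(velocity.east, velocity.north, velocity.up);
  // atan2(east, north) measures the angle clockwise from north.
  const headingDeg =
    ((Math.atan2(velocity.east, velocity.north) * 180) / Math.PI + 360) % 360;
  return { velocity, speed, headingDeg };
}
```

For example, an observer walking east at 2 m/s who sees the bird moving east at an apparent 8 m/s would get a ground speed of 10 m/s on a heading of roughly 90 degrees.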

GSCNA - Geospatial Sighting Classification & Notification Algorithm

GSCNA handles the real-time alert distribution system:

1. Initial Alert: When a bird is detected, all users within 5 km receive an immediate notification.

2. Flight Path Prediction: Based on AMVEA's velocity vector, we project a 5-minute straight-line flight path (a reasonable approximation for short-term migration tracking).

3. Progressive Alerts: Users within 1 km of the predicted flight path receive alerts with estimated arrival times.

This creates a cascading notification system that lets birdwatchers position themselves strategically to observe and verify sightings.
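The three rules above can be sketched in a few lines. This is an illustration under stated assumptions, not the GSCNA code: it works in a flat local plane around the sighting (an equirectangular approximation, which is reasonable over a few kilometres), and every name in it (`Sighting`, `AlertDecision`, `decideAlerts`) is hypothetical. Only the 5 km radius, the 1 km corridor, and the 5-minute window come from the description.

```typescript
// Hypothetical sketch of the alert rules; the flat-earth maths is an assumption.
interface LatLon { lat: number; lon: number } // degrees

interface Sighting {
  position: LatLon;
  speedMps: number;   // ground speed from the motion estimate
  headingDeg: number; // compass heading: 0 = north, 90 = east
}

interface AlertDecision {
  initialAlert: boolean;
  progressiveAlert: { distanceToPathM: number; etaSeconds: number } | null;
}

const INITIAL_RADIUS_M = 5000;
const PATH_CORRIDOR_M = 1000;
const PREDICTION_WINDOW_S = 5 * 60;
const EARTH_RADIUS_M = 6371000;

// Offset of p from origin as [east, north] in metres (equirectangular approximation).
function toLocalMetres(origin: LatLon, p: LatLon): [number, number] {
  const rad = Math.PI / 180;
  const east = (p.lon - origin.lon) * rad * EARTH_RADIUS_M * Math.cos(origin.lat * rad);
  const north = (p.lat - origin.lat) * rad * EARTH_RADIUS_M;
  return [east, north];
}

function decideAlerts(sighting: Sighting, user: LatLon): AlertDecision {
  const [ue, un] = toLocalMetres(sighting.position, user);
  const initialAlert = Math.hypot(ue, un) <= INITIAL_RADIUS_M;

  // End point of the straight-line path after the prediction window.
  const theta = (sighting.headingDeg * Math.PI) / 180;
  const pathLen = sighting.speedMps * PREDICTION_WINDOW_S;
  const pe = pathLen * Math.sin(theta);
  const pn = pathLen * Math.cos(theta);
  const pathLenSq = pe * pe + pn * pn;
  if (pathLenSq === 0) return { initialAlert, progressiveAlert: null };

  // Fraction of the path (0..1) at which the bird passes closest to the user.
  const t = Math.min(1, Math.max(0, (ue * pe + un * pn) / pathLenSq));
  const distanceToPathM = Math.hypot(ue - t * pe, un - t * pn);
  const progressiveAlert =
    distanceToPathM <= PATH_CORRIDOR_M
      ? { distanceToPathM, etaSeconds: t * PREDICTION_WINDOW_S }
      : null;
  return { initialAlert, progressiveAlert };
}
```

Here the estimated arrival time is the moment the bird reaches the point on the predicted path closest to the user. A production system would more likely push this filtering into the database than loop over users in application code.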

Computer Vision Implementation

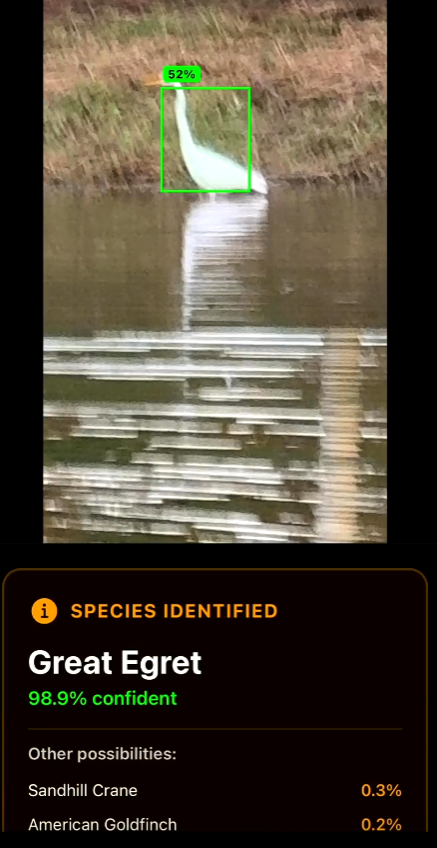

For species identification, we implemented a dual-model approach:

YOLOv5s for bird detection and bounding box generation

MobileNetV2 for species classification

This combination achieved our target accuracy while maintaining real-time performance on consumer smartphones. The models were trained on diverse datasets of North American bird species.
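The two-stage flow can be sketched as a small pipeline: the detector proposes bounding boxes, low-confidence boxes are dropped, and the classifier labels each remaining crop. This is a sketch under assumptions, not our actual inference code. The `Detector` and `Classifier` function types stand in for the YOLOv5s and MobileNetV2 wrappers, the 0.5 threshold is a placeholder, and the calls are synchronous for brevity where real on-device inference would be asynchronous.

```typescript
// Hypothetical interfaces; the real model wrappers are not shown here.
interface BoundingBox {
  x: number;
  y: number;
  width: number;
  height: number;
  confidence: number; // detector confidence in [0, 1]
}

interface SpeciesGuess {
  species: string;
  confidence: number; // classifier confidence in [0, 1]
}

interface Identification extends SpeciesGuess {
  box: BoundingBox;
}

// Stage 1: find birds in a frame. Stage 2: name the species inside one box.
type Detector<Frame> = (frame: Frame) => BoundingBox[];
type Classifier<Frame> = (frame: Frame, box: BoundingBox) => SpeciesGuess;

function identifyBirds<Frame>(
  frame: Frame,
  detect: Detector<Frame>,
  classify: Classifier<Frame>,
  minDetectionConfidence = 0.5, // placeholder threshold, not a tuned value
): Identification[] {
  return detect(frame)
    .filter((box) => box.confidence >= minDetectionConfidence)
    .map((box) => ({ box, ...classify(frame, box) }));
}
```

Splitting detection from classification keeps each model small and lets the classifier run only on regions that are likely to contain a bird.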

Gamification & User Engagement

To encourage sustained participation, we built a comprehensive gamification system:

These features transformed scientific data collection into an engaging social experience.

Development Constraints & Solutions

Time Pressure

With only one semester (six two-week sprints), we adopted aggressive agile methodologies and parallel development tracks.

Platform Compatibility

React Native with Expo allowed us to maintain a single codebase while deploying to both iOS and Android, dramatically reducing development time.

Hardware Limitations

We couldn't rely on specialized equipment, so we maximized the capabilities of standard smartphone sensors through sophisticated sensor fusion algorithms.

Budget Constraints

By leveraging free-tier cloud services and open-source technologies, we kept infrastructure costs at zero while maintaining production-quality deployment.

Results & Impact

We successfully delivered a functional MVP that demonstrates real-time avian monitoring is achievable through mobile sensor fusion and computer vision. The system:

Technical Takeaways

Sensor Fusion is Powerful: Combining multiple imperfect sensors produces remarkably accurate motion tracking when properly calibrated and filtered.

Edge Computing Matters: Running inference on-device reduces latency and eases privacy concerns compared to cloud-based vision processing, because images never have to leave the phone.

Geospatial Indexing Scales: MongoDB's geospatial queries handled real-time radius searches efficiently, even with growing datasets.
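As an illustration of the kind of radius search involved, the sketch below builds a MongoDB `$nearSphere` filter for a GeoJSON point. It is not taken from our codebase: the `location` field name, the `users` collection mentioned in the comment, and the helper name `nearbyFilter` are assumptions. The operator shape itself (`$geometry` plus `$maxDistance` in metres, backed by a `2dsphere` index) is standard MongoDB.

```typescript
// Hypothetical helper; the `location` field and `users` collection are assumptions.
// The queried field needs a 2dsphere index, created once in mongosh with e.g.:
//   db.users.createIndex({ location: "2dsphere" })

interface GeoJsonPoint {
  type: "Point";
  coordinates: [number, number]; // GeoJSON order: [longitude, latitude]
}

interface NearbyFilter {
  location: {
    $nearSphere: {
      $geometry: GeoJsonPoint;
      $maxDistance: number; // metres when $geometry is a GeoJSON point
    };
  };
}

function nearbyFilter(
  longitude: number,
  latitude: number,
  radiusMetres: number,
): NearbyFilter {
  if (Math.abs(latitude) > 90 || Math.abs(longitude) > 180) {
    throw new RangeError("longitude/latitude out of range (check argument order)");
  }
  return {
    location: {
      $nearSphere: {
        $geometry: { type: "Point", coordinates: [longitude, latitude] },
        $maxDistance: radiusMetres,
      },
    },
  };
}

// The 5 km initial-alert radius would then be expressed as:
const initialAlertFilter = nearbyFilter(-110.95, 32.23, 5000);
```

The resulting object can be passed as the filter to a `find` call in the MongoDB driver. Results come back sorted from nearest to farthest.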

Gamification Drives Engagement: The addition of points, badges, and leaderboards significantly increased test user retention during our pilot phase.

Future Enhancements

If we were to continue development, promising directions include:

Conclusion

AVN demonstrates how modern mobile technologies can transform citizen science. By combining computer vision, sensor fusion, and social gamification, we created a platform that makes wildlife monitoring both scientifically valuable and personally rewarding.

This project showcased the power of interdisciplinary engineering (blending computer vision, embedded systems, distributed systems, and UX design) to solve real-world conservation challenges. Working with a Raytheon sponsorship and alongside talented teammates made this one of the most fulfilling projects of my academic career.

The intersection of technology and environmental science offers immense potential for positive impact, and AVN represents just one example of what's possible when we apply engineering creativity to ecological challenges.